Addressing the ethics of artificial intelligence is important. But are we becoming so obsessed with the rights and wrongs of AI that we’re taking our eye off the potential risks?

In a recent presentation, part of a symposium series sponsored by the National Academies of Sciences, Engineering, and Medicine, I argued that we need to pay more attention to the easy-to-overlook risks presented by AI, and to how we can effectively navigate them.

The symposium focused on how artificial intelligence and machine learning transform the human condition, and was hosted by Los Alamos National Laboratory, the National Academies of Sciences, Engineering, and Medicine, and the National Nuclear Security Administration. Presentations included broad perspectives on AI and society from leading experts including Stuart Russell (University of California, Berkeley) and Fei-Fei Li (Stanford University), as well as perspectives on specific challenges around developing beneficial applications from experts such as Philip Sabes (Starfish Neuroscience, LLC and University of California, San Francisco) and Lindsey Sheppard (Center for Strategic & International Studies).

My presentation focused on the need for more innovative approaches to the potential risks presented by AI. You can watch it below.

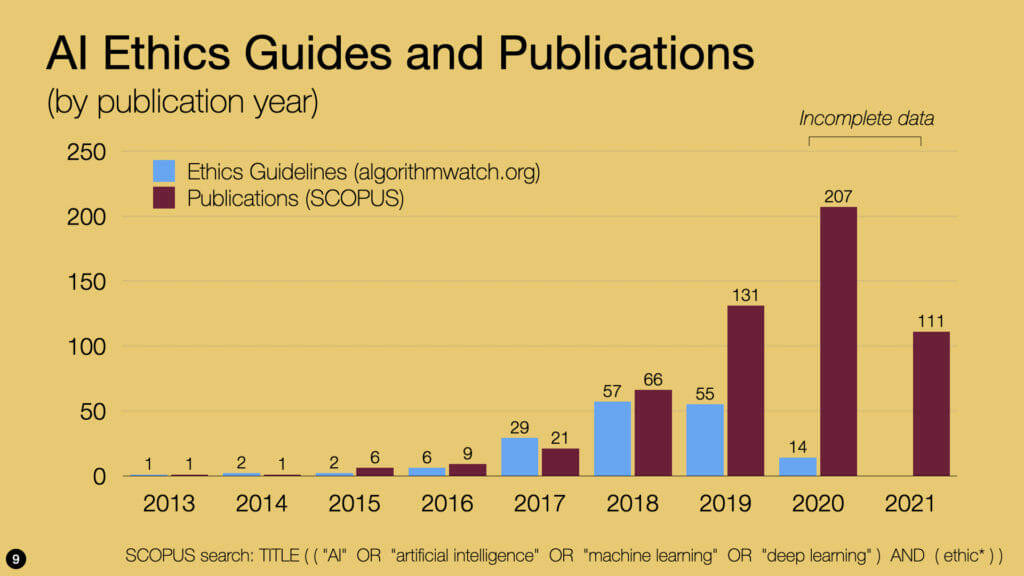

Part of the argument I make here is that, while there’s been a surge of interest in studying the ethics of AI and developing ethical guidelines, there’s been comparatively little work on how we address the risk implications of artificial intelligence.

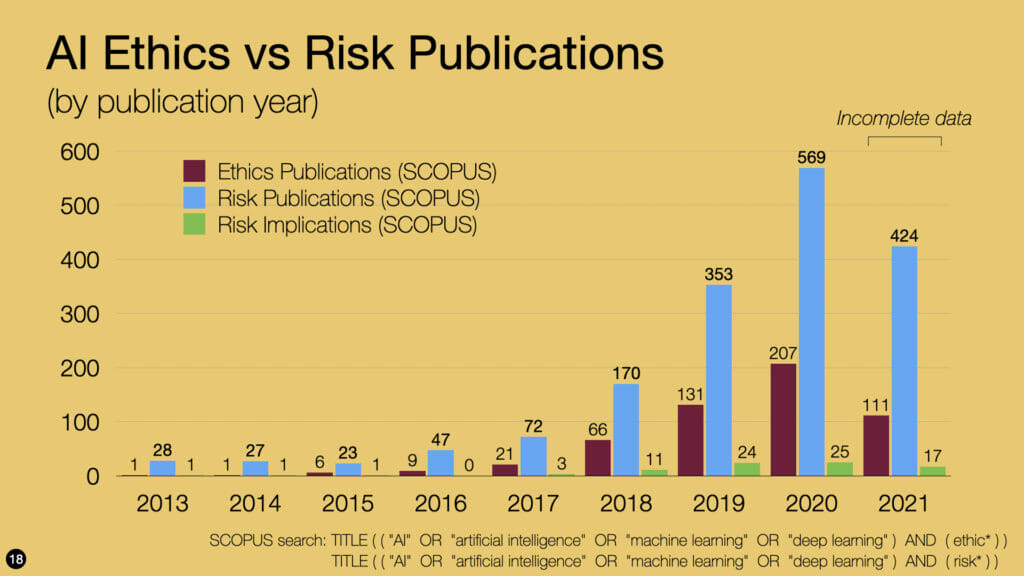

This is seen in the slides below. The first (figure 1) shows the growth in AI ethics guides and academic papers over the past few years. The second (figure 2) shows comparative trends in academic papers addressing AI and risk.

As I note in the presentation, I was initially pleasantly surprised by the data in figure 2, as it seemed to indicate that people were treating AI risk seriously. However, on closer examination it became apparent that the vast majority of papers focus on how to use AI to assess and manage non-AI risks more effectively, rather than on the risks presented by AI itself.

When these papers were filtered out, the data presented a very different picture, one that indicates just how little research or thought seems to be going into the potential risks of AI and how to navigate them.

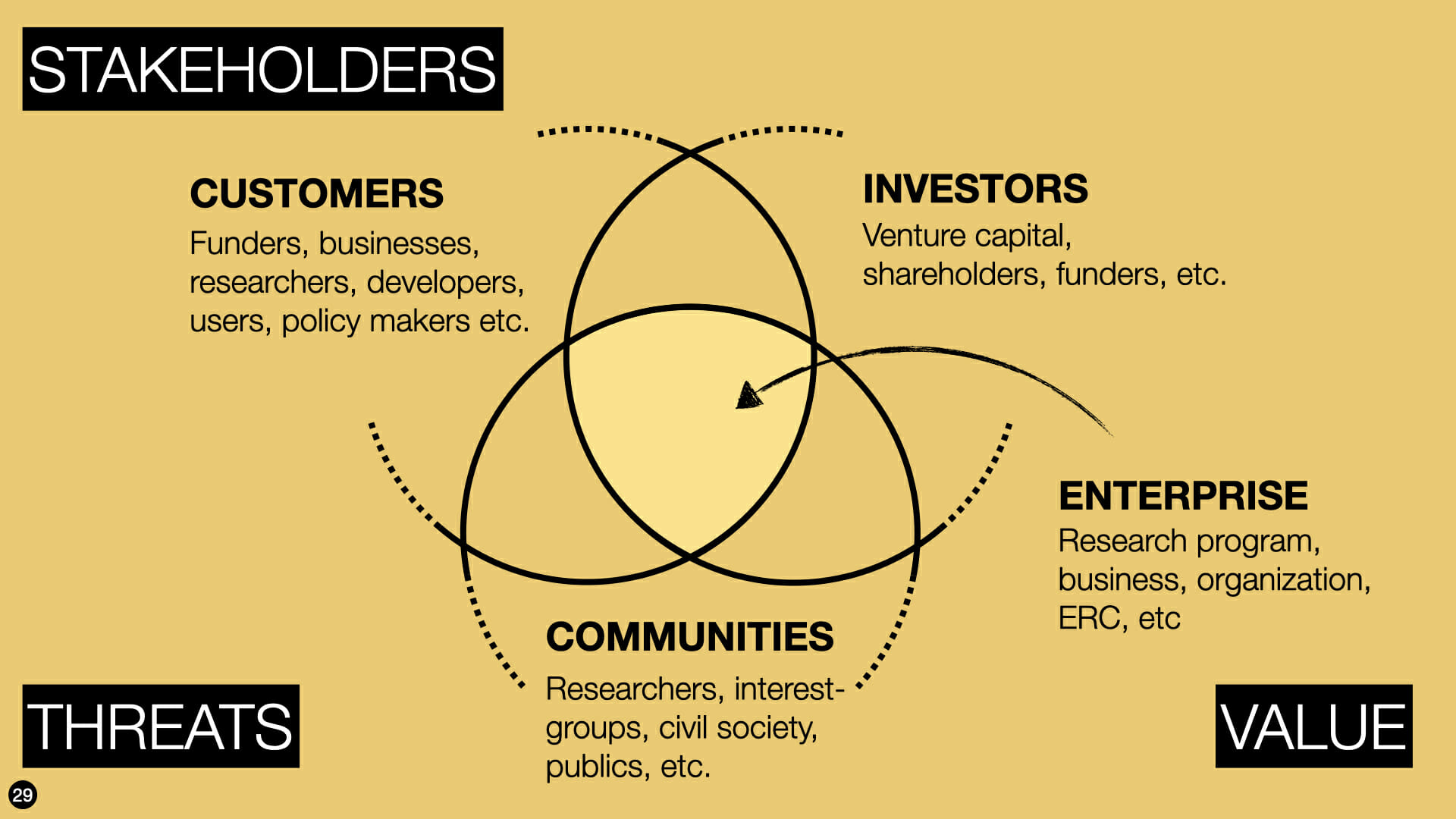

Clearly, there’s work to be done here if we’re to ensure that AI enhances the future opportunities we’re facing rather than diminishes them. The good news is that innovative approaches to risk such as the ones we use in the ASU Risk Innovation Nexus can help us navigate toward more beneficial uses of AI-based technologies.

The full slide deck can be downloaded here.

Andrew Maynard

Director, ASU Future of Being Human initiative

Substack: The Future of Being Human